Extraction of Objects in Images and Videos Using 5 Lines of Code

Computer vision is the medium through which computers see and identify objects. Its goal is to make it possible for computers to analyze objects in images and videos and so solve different vision problems. Object segmentation has paved the way for convenient analysis of objects in images and videos, contributing immensely to fields such as medicine, vision in self-driving cars, and background editing in images and videos.

PixelLib is a library created for easy integration of image and video segmentation into real-life applications. It employs powerful object segmentation techniques to make computer vision accessible to everyone. I am excited to announce that the new version of PixelLib makes the analysis of objects in computer vision easier than ever. PixelLib uses segmentation techniques to extract objects from images and videos in five lines of code.

Install PixelLib and its dependencies:

Install TensorFlow (PixelLib supports TensorFlow 2.0 and above) with:

- pip3 install tensorflow

Install PixelLib with:

- pip3 install pixellib

If PixelLib is already installed, upgrade to the latest version using:

- pip3 install pixellib --upgrade
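After installing, it can help to confirm which versions are actually present. The snippet below is a small sketch that uses only the Python standard library (3.8 or later); the helper name `installed_version` is illustrative and not part of PixelLib.

```python
from importlib import metadata


def installed_version(package_name):
    """Return the installed version of a package, or None if it is absent."""
    try:
        return metadata.version(package_name)
    except metadata.PackageNotFoundError:
        return None


# Report the two packages this article depends on.
for name in ("tensorflow", "pixellib"):
    version = installed_version(name)
    print(f"{name}: {version if version else 'not installed'}")
```

If TensorFlow is reported as older than 2.0, upgrade it before going further.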

Object Extraction in Images Using Mask R-CNN COCO Model

import pixellib

from pixellib.instance import instance_segmentation

segment_image = instance_segmentation()

segment_image.load_model("mask_rcnn_coco.h5")

Lines 1–4: We imported the PixelLib package, created an instance of the instance_segmentation class, and loaded the pretrained COCO model. Download the model from here.

segment_image.segmentImage("image_path", extract_segmented_objects=True, save_extracted_objects=True, show_bboxes=True, output_image_name="output.jpg")

This is the final line of the code, where we call the segmentImage function with the following parameters:

image_path: This is the path to the image to be segmented.

extract_segmented_objects: This parameter tells the function to extract the objects segmented in the image. It is set to True.

save_extracted_objects: This is an optional parameter for saving the extracted segmented objects.

show_bboxes: This parameter shows the segmented objects with bounding boxes. If it is set to False, only the segmentation masks are shown.

output_image_name: This is the path to save the output image.

Sample image

Full code for object extraction
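Putting the steps above together, a minimal sketch might look like the following. The wrapper function `extract_objects` and the early file checks are illustrative additions, not part of PixelLib; the PixelLib calls and parameters are the ones described above, and the function simply passes back whatever `segmentImage` returns.

```python
import os


def extract_objects(image_path, model_path="mask_rcnn_coco.h5",
                    output_image_name="output.jpg"):
    """Segment an image with the COCO model and extract every detected object."""
    # Fail early, before the heavy TensorFlow import, if a file is missing.
    for path in (image_path, model_path):
        if not os.path.isfile(path):
            raise FileNotFoundError(f"File not found: {path}")

    # Imported lazily so the checks above run even without PixelLib installed.
    from pixellib.instance import instance_segmentation

    segment_image = instance_segmentation()
    segment_image.load_model(model_path)
    return segment_image.segmentImage(
        image_path,
        extract_segmented_objects=True,
        save_extracted_objects=True,
        show_bboxes=True,
        output_image_name=output_image_name,
    )
```

With the model file downloaded, calling `extract_objects("sample.jpg")` should write the annotated image to output.jpg and save the extracted objects.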

Output Image

Extracted Objects from the image

Note: Each object in the image is extracted and saved as its own image. I have displayed just a few of them.

Segmentation of Specific Classes in Coco Model

We use a pretrained Mask R-CNN COCO model to perform image segmentation. The COCO model supports 80 classes of objects, but in some applications we may not want to segment all of them. PixelLib therefore makes it possible to filter out unused detections and segment only specific classes.

Modified code for Segmenting Specific Classes

target_classes = segment_image.select_target_classes(person=True)

It is still the same code, except that we call a new function, select_target_classes, to filter out unused detections and segment only our target class, which is person.

segment_image.segmentImage("sample.jpg", segment_target_classes=target_classes, extract_segmented_objects=True, save_extracted_objects=True, show_bboxes=True, output_image_name="output.jpg")

In the segmentImage function we introduce a new parameter, segment_target_classes, which performs segmentation only on the target classes returned by select_target_classes.

Wow! We were able to detect only the people in this image.

What if we are interested only in the means of transport in this picture?

target_classes = segment_image.select_target_classes(car=True, bicycle=True)

We changed the target class from person to car and bicycle.

Beautiful result! We detected only the bicycles and cars in this picture.

Note: If you filter the COCO model's detections, only the segmented objects of the target classes are extracted.
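When the target classes come from a list rather than being typed by hand, the keyword flags can be built programmatically. This is a sketch under the assumption that `select_target_classes` accepts one boolean keyword per class, as in the calls above; `target_flags` and `extract_classes` are hypothetical helper names, and only class names the function actually accepts (such as person, car, and bicycle) will work.

```python
def target_flags(*class_names):
    """Build the keyword flags passed to select_target_classes."""
    return {name: True for name in class_names}


def extract_classes(image_path, class_names, model_path="mask_rcnn_coco.h5",
                    output_image_name="output.jpg"):
    """Segment and extract only the named COCO classes from one image."""
    # Lazy import: PixelLib pulls in TensorFlow, which is slow to load.
    from pixellib.instance import instance_segmentation

    segment_image = instance_segmentation()
    segment_image.load_model(model_path)
    # For class_names == ["car", "bicycle"] this is equivalent to
    # select_target_classes(car=True, bicycle=True).
    flags = target_flags(*class_names)
    target_classes = segment_image.select_target_classes(**flags)
    return segment_image.segmentImage(
        image_path,
        segment_target_classes=target_classes,
        extract_segmented_objects=True,
        save_extracted_objects=True,
        show_bboxes=True,
        output_image_name=output_image_name,
    )
```

For example, `extract_classes("sample.jpg", ["car", "bicycle"])` would reproduce the means-of-transport result above.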

Object Extraction in Videos Using Coco Model

PixelLib supports the extraction of segmented objects in videos and camera feeds.

sample video

segment_video.process_video("sample.mp4", show_bboxes=True, extract_segmented_objects=True, save_extracted_objects=True, frames_per_second=5, output_video_name="output.mp4")

It is still the same code (segment_video is an instance_segmentation object with the COCO model loaded, as before), except that we changed the function from segmentImage to process_video. It takes the following parameters:

- show_bboxes: This parameter shows the segmented objects with bounding boxes. If it is set to False, only the segmentation masks are shown.

- frames_per_second: This parameter sets the number of frames per second for the saved video file. Here it is set to 5, i.e. the saved video file will have 5 frames per second.

- extract_segmented_objects: This parameter tells the function to extract the objects segmented in the video. It is set to True.

- save_extracted_objects: This is an optional parameter for saving the extracted segmented objects.

- output_video_name: This is the name of the saved segmented video.

Output Video

Extracted objects from the video

Note: Each object in the video is extracted and saved as its own image. I have displayed just some of them.

Segmentation of Specific Classes in Videos

PixelLib makes it possible to filter unused detections and segment specific classes in videos and camera feeds.

target_classes = segment_video.select_target_classes(person=True)

segment_video.process_video("sample.mp4", show_bboxes=True, segment_target_classes=target_classes, extract_segmented_objects=True, save_extracted_objects=True, frames_per_second=5, output_video_name="output.mp4")

The target class for detection is set to person and we were able to segment only the people in the video.

target_classes = segment_video.select_target_classes(car=True)

segment_video.process_video("sample.mp4", show_bboxes=True, segment_target_classes=target_classes, extract_segmented_objects=True, save_extracted_objects=True, frames_per_second=5, output_video_name="output.mp4")

The target class for segmentation is set to car and we were able to segment only the cars in the video.

Full code for Segmenting Specific Classes and Object Extraction in Videos
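The video steps above can be assembled roughly as follows. This is a sketch, not a definitive listing: the wrapper `extract_from_video`, its argument checks, and the optional `class_names` argument are illustrative additions, while the PixelLib calls and parameters are the ones already shown.

```python
import os


def extract_from_video(video_path, class_names=(), model_path="mask_rcnn_coco.h5",
                       frames_per_second=5, output_video_name="output.mp4"):
    """Segment a video and extract objects, optionally for chosen classes only."""
    if frames_per_second <= 0:
        raise ValueError("frames_per_second must be positive")
    for path in (video_path, model_path):
        if not os.path.isfile(path):
            raise FileNotFoundError(f"File not found: {path}")

    # Imported lazily so the checks above run without loading TensorFlow.
    from pixellib.instance import instance_segmentation

    segment_video = instance_segmentation()
    segment_video.load_model(model_path)

    options = {
        "show_bboxes": True,
        "extract_segmented_objects": True,
        "save_extracted_objects": True,
        "frames_per_second": frames_per_second,
        "output_video_name": output_video_name,
    }
    if class_names:
        # Restrict detection to the requested classes, e.g. ["person"].
        flags = {name: True for name in class_names}
        options["segment_target_classes"] = segment_video.select_target_classes(**flags)
    return segment_video.process_video(video_path, **options)
```

Calling `extract_from_video("sample.mp4", class_names=["car"])` corresponds to the car-only example above; omitting `class_names` processes every supported class.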

Full code for Segmenting Specific Classes and Object Extraction in Camera Feeds

import cv2

capture = cv2.VideoCapture(0)

We imported cv2 and included the code to capture the camera's frames.

segment_camera.process_camera(capture, show_bboxes=True, show_frames=True, extract_segmented_objects=True, save_extracted_objects=True, frame_name="frame", frames_per_second=5, output_video_name="output.mp4")

In the code for performing segmentation, we replaced the video's file path with capture, i.e. we are processing a stream of frames captured by the camera. We added extra parameters for showing the camera's frames:

- show_frames: This parameter controls whether the segmented camera frames are displayed.

- frame_name: This is the name given to the displayed camera frame.
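The camera steps above could be wrapped as in the sketch below. The function name `segment_camera_feed`, the check that the camera opened, and the optional `class_names` argument are illustrative additions; the `process_camera` parameters are the ones listed above.

```python
def segment_camera_feed(capture, class_names=(), model_path="mask_rcnn_coco.h5",
                        frames_per_second=5, output_video_name="output.mp4"):
    """Segment frames from an opened camera and extract the detected objects."""
    # A capture that failed to open yields no frames, so stop early.
    if not capture.isOpened():
        raise RuntimeError("Camera could not be opened")

    # Imported lazily so the check above runs without loading TensorFlow.
    from pixellib.instance import instance_segmentation

    segment_camera = instance_segmentation()
    segment_camera.load_model(model_path)

    extra = {}
    if class_names:
        flags = {name: True for name in class_names}
        extra["segment_target_classes"] = segment_camera.select_target_classes(**flags)
    return segment_camera.process_camera(
        capture,
        show_bboxes=True,
        show_frames=True,
        extract_segmented_objects=True,
        save_extracted_objects=True,
        frame_name="frame",
        frames_per_second=frames_per_second,
        output_video_name=output_video_name,
        **extra,
    )


# Typical use (requires OpenCV and an attached camera):
# import cv2
# capture = cv2.VideoCapture(0)
# segment_camera_feed(capture, class_names=["person"])
```

Checking `isOpened()` first gives a clear error when no camera is available at index 0, rather than a failure deeper inside the processing loop.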

Object Extraction in Images Using Custom Models Trained with PixelLib

PixelLib supports training a custom segmentation model, and objects segmented with a custom model can be extracted in the same way.

import pixellib

from pixellib.instance import custom_segmentation

segment_image = custom_segmentation()

segment_image.inferConfig(num_classes=2, class_names=['BG', 'butterfly', 'squirrel'])

segment_image.load_model("Nature_model_resnet101.h5")

Lines 1–5: We imported the PixelLib package, created an instance of the custom_segmentation class, called the inference configuration function (inferConfig), and loaded the custom model. Download the custom model from here. The custom model supports two classes:

- Butterfly

- Squirrel

segment_image.segmentImage("image_path", extract_segmented_objects=True, save_extracted_objects=True, show_bboxes=True, output_image_name="output.jpg")

We called the same segmentImage function used for COCO model detection.

Full Code for Object Extraction with A Custom Model
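A minimal sketch of the custom-model steps above follows. In the article's example, class_names lists the background label 'BG' first and then one name per class, so num_classes=2 goes with three names; the helper `check_class_config` encodes that pattern as an assumption drawn from this example. Both helper names are hypothetical and not part of PixelLib.

```python
import os


def check_class_config(num_classes, class_names):
    """Check that class_names is 'BG' followed by num_classes labels."""
    if not class_names or class_names[0] != "BG":
        raise ValueError("class_names should start with the background label 'BG'")
    if len(class_names) != num_classes + 1:
        raise ValueError(
            f"expected {num_classes + 1} names including 'BG', got {len(class_names)}"
        )


def extract_with_custom_model(image_path, model_path="Nature_model_resnet101.h5",
                              num_classes=2,
                              class_names=("BG", "butterfly", "squirrel"),
                              output_image_name="output.jpg"):
    """Segment an image with a custom model and extract the detected objects."""
    check_class_config(num_classes, class_names)
    for path in (image_path, model_path):
        if not os.path.isfile(path):
            raise FileNotFoundError(f"File not found: {path}")

    # Imported lazily so the checks above run without loading TensorFlow.
    from pixellib.instance import custom_segmentation

    segment_image = custom_segmentation()
    segment_image.inferConfig(num_classes=num_classes, class_names=list(class_names))
    segment_image.load_model(model_path)
    return segment_image.segmentImage(
        image_path,
        extract_segmented_objects=True,
        save_extracted_objects=True,
        show_bboxes=True,
        output_image_name=output_image_name,
    )
```

Validating the class list before loading the model catches a mismatched configuration in milliseconds rather than after the weights have been read.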

sample image

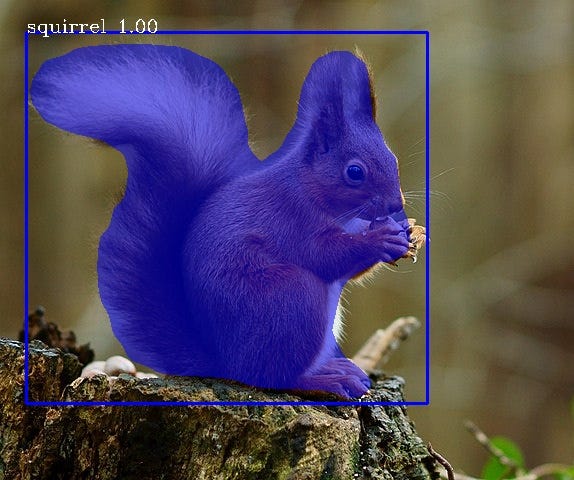

Output

Extracted Object from the image

Object Extraction in Videos Using A Custom Model Trained with PixelLib

sample video

Full Code for Object Extraction in Videos Using A Custom Model.
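For videos, the custom model can be combined with process_video in the same way as the COCO model. The sketch below assumes that custom_segmentation exposes process_video with the parameters used earlier in this article; the wrapper name `extract_from_video_custom` is hypothetical.

```python
import os


def extract_from_video_custom(video_path, model_path="Nature_model_resnet101.h5",
                              frames_per_second=5, output_video_name="output.mp4"):
    """Segment a video with the butterfly/squirrel model and extract objects."""
    for path in (video_path, model_path):
        if not os.path.isfile(path):
            raise FileNotFoundError(f"File not found: {path}")

    # Imported lazily so the checks above run without loading TensorFlow.
    from pixellib.instance import custom_segmentation

    segment_video = custom_segmentation()
    # Same inference configuration as the image example: background + 2 classes.
    segment_video.inferConfig(num_classes=2,
                              class_names=["BG", "butterfly", "squirrel"])
    segment_video.load_model(model_path)
    return segment_video.process_video(
        video_path,
        show_bboxes=True,
        extract_segmented_objects=True,
        save_extracted_objects=True,
        frames_per_second=frames_per_second,
        output_video_name=output_video_name,
    )
```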

Output

Extracted Objects

Full Code for Object Extraction in Camera Feeds Using A Custom Model

Read this article to learn how to train a custom model with PixelLib.

Reach me via:

Email: olafenwaayoola@gmail.com

Twitter: @AyoolaOlafenwa

Facebook: Ayoola Olafenwa

Linkedin: Ayoola Olafenwa

Check out these articles written on how to make use of PixelLib for semantic segmentation, instance segmentation and background editing in images and videos.